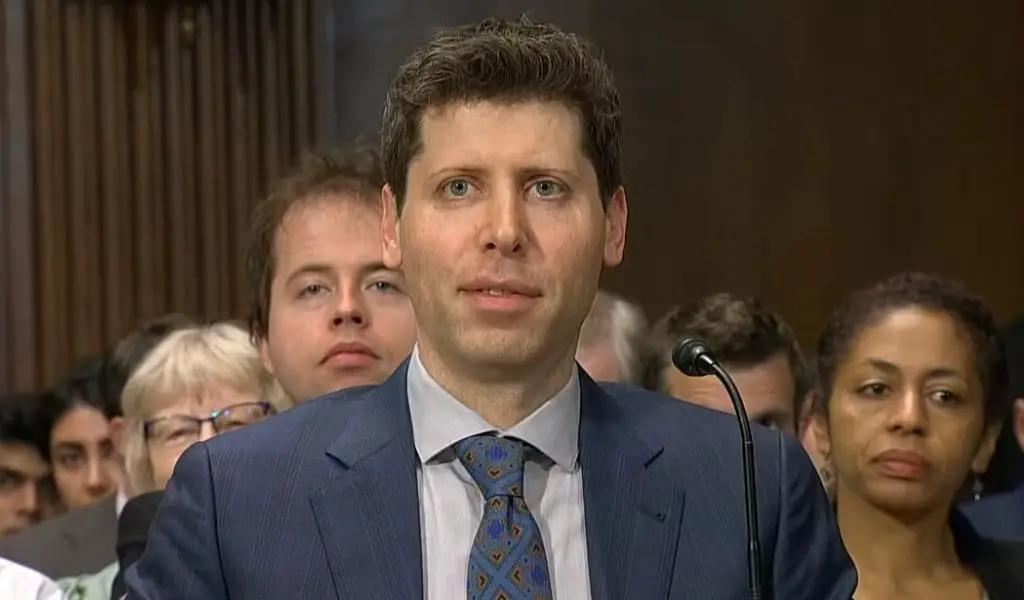

Sam Altman is the CEO of OpenAI, the company that created ChatGPT. If the growing field of artificial intelligence has a spokesperson, it is him.

Altman appeared before the Senate Judiciary Committee on Tuesday, where he spoke in broad, global terms about the future of artificial intelligence.

The remarkable speed with which artificial intelligence has improved in just a few months has given Congress a rare bipartisan push to make sure Silicon Valley doesn’t outpace Washington again.

Policymakers had heard the complaints coming from Palo Alto and Mountain View for decades. But as evidence mounts that relying too heavily on digital technology has significant social, cultural, and economic downsides, both sides have shown a willingness, for different reasons, to regulate the technology.

The integration of artificial intelligence into American society is a major test for lawmakers who want to show the high-tech industry that it can’t avoid the scrutiny other industries have long been accustomed to.

Also testifying with Altman were the chairwoman of IBM’s AI ethics board, Christina Montgomery, and New York University professor Gary Marcus, a critic of AI.

Here are some key moments from the hearing.

Congress failed to meet the moment on social media

Sen. Richard Blumenthal, D-Connecticut, said in his opening remarks, which were generated in part by artificial intelligence, that Congress has struggled to come up with effective rules for social media.

He said Congress had missed the mark on social media. “Now we have an obligation to do it with AI before the threats and risks become real.”

After the 2016 election, many Democrats said that Twitter and Facebook spread false information that helped Donald Trump win the presidency over Hillary Clinton. Republicans, on the other hand, said these same platforms were censoring material that was more to the right or “shadow banning” conservatives.

Political disagreements aside, it has become clear that social media is bad for teens, helps spread bigoted ideas, and leaves people feeling more anxious and isolated. For social media companies that have become central to business and culture, it may be too late to address these issues. But members of Congress agreed there is still time to make sure AI doesn’t cause the same social problems.

Some jobs will disappear

IBM’s Montgomery said it’s clear that artificial intelligence poses risks to workers in many industries, even those thought to be safe from automation in the past.

She said: “Some jobs will disappear.”

Altman, who has become something of an “elder statesman” in his field and seemed eager to assume that role on Capitol Hill on Tuesday, had a different view.

He was talking about OpenAI’s new generative AI model, GPT-4. “I think it’s important to understand and think about GPT-4 as a tool, not a creature,” he said. He added that these kinds of models were “good at doing tasks, not jobs,” so they would make people’s work easier without taking away their jobs.

This is not social media. This is different

Altman knew the senators regretted missing the chance to regulate social media, so he presented artificial intelligence as an entirely different development, one likely to be far more important and useful than a source of cat memes (or racist messages, for that matter).

“This is not social media,” he said. “This is something different.”

Altman and Montgomery agreed that there needed to be rules, but at this early stage of the policy discussion, neither they nor the politicians could say what those rules should be.

“The age of AI cannot be another age of ‘Move fast and break things,’” Montgomery said, referring to an old Silicon Valley saying. “But we also don’t have to stop doing new things.”

The White House released a proposed AI Bill of Rights last year, meant to guard against misinformation, discrimination, and other harms. Altman was among the people Vice President Kamala Harris recently met with at the White House.

But so far, there has been no regulatory system, although everyone agrees that one is badly needed.

Humanity has taken a back seat

Marcus, a professor at New York University, emerged as the only AI critic on the panel, saying that “humanity has taken a backseat” as companies rush to create ever more complex AI models without having to take into account the possible risks.

Altman also acknowledged the potential severity of those risks. “I think if this technology goes wrong, it can go quite wrong,” he added. It was a striking admission from one of the technology’s most outspoken proponents, and a welcome change from Silicon Valley’s veneer of perhaps misleading optimism.

Source: vtt.edu.vn