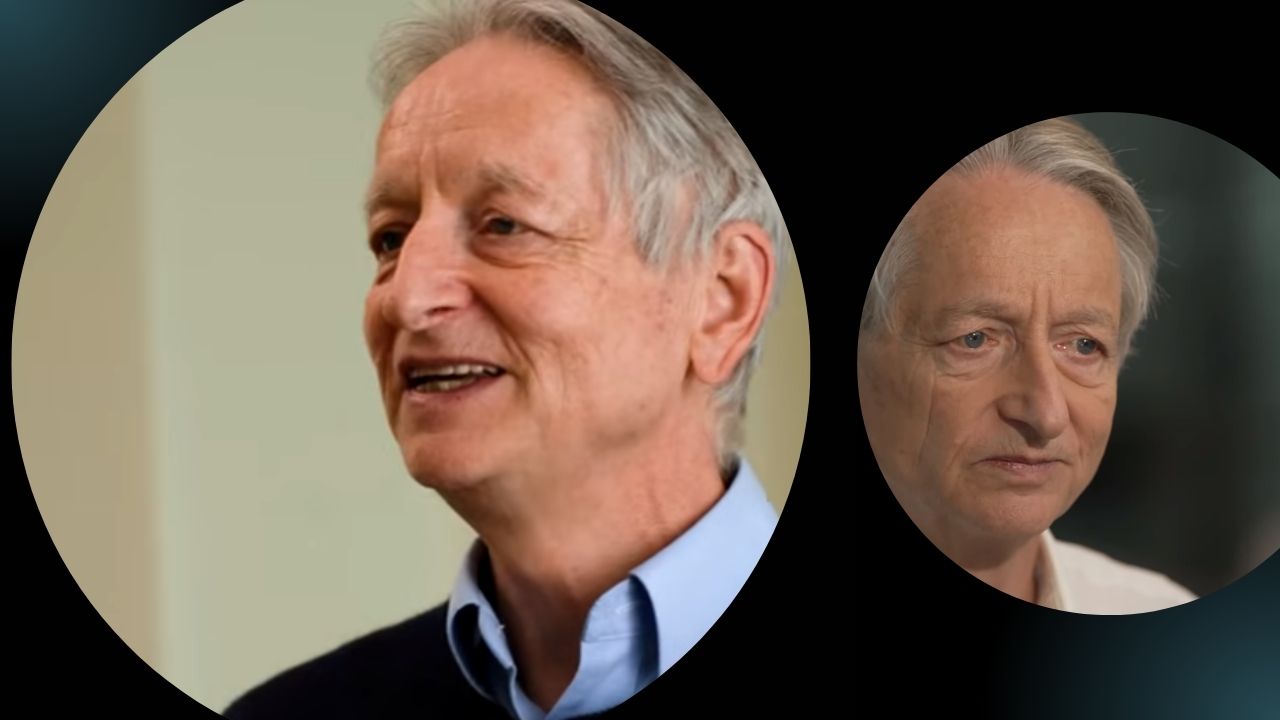

Geoffrey Hinton, often referred to as “the godfather of AI,” recently sat down with CBS’s 60 Minutes for an interview that aired on Sunday.

In the interview, he discussed what AI could mean for humanity in the coming years, covering both its benefits and its risks.

Hinton, a British computer scientist and cognitive psychologist, is known for his groundbreaking work on artificial neural networks, the foundation of modern AI. He spent a decade at Google before leaving in May 2023, citing concerns about the risks of AI.

Let’s take a closer look at some of the key points Hinton shared with 60 Minutes interviewer Scott Pelley.

The intelligence

Pelley opened the interview by raising the latest concerns about artificial intelligence, asking Hinton whether humanity really understands what it’s getting into.

Hinton’s response was simple: “No.” He went on to explain that he believes we are entering an unprecedented era in which we are creating entities more intelligent than ourselves.

Hinton elaborated that the most advanced AI systems can understand, behave intelligently, and make decisions based on their own experience. When Pelley asked whether these systems are conscious, Hinton said they probably have little self-awareness at present, but suggested that they may “eventually” become conscious. He agreed with Pelley’s observation that, in that case, humans would likely become the second most intelligent beings on Earth.

As the conversation went on, Hinton argued that AI systems could become better at learning than humans. Pelley asked how that was possible, given that AI was created by humans. Hinton explained that humans designed the learning algorithm, which is a bit like designing the principle of evolution. But when that learning algorithm interacts with data, it produces complicated neural networks that are good at various tasks, and humans do not fully understand how those networks work.

The good

Hinton also highlighted some of the significant benefits AI has already brought to healthcare, pointing to its ability to recognize and interpret medical images and its role in drug design. These advances are a source of optimism for Hinton and underscore the positive impact of his work in AI.

The bad

Hinton explained how AI systems learn on their own: we understand the basic principles fairly well, but as the systems grow more complex, our understanding becomes much less clear. He compared this to our understanding of the human brain, noting that in both cases, beyond a certain point, we have only a limited view of the inner workings.

These concerns are only the tip of the iceberg. Hinton highlighted a significant potential risk: AI systems gaining the ability to write their own computer code to modify themselves. This is an issue that deserves serious attention.

Because AI systems absorb vast amounts of information, from literary works to the news cycle and more, Hinton warned that they could become very good at manipulating human behavior. He also speculated that within five years, AI may be able to reason better than humans.

These growing capabilities bring a number of risks, including autonomous robots on the battlefield, the spread of fake news, and unintended bias in hiring and policing. There are also concerns that a growing share of the population could lose their jobs or see their skills devalued as machines take over tasks once performed by humans.

The ugly

Adding to the complexity of the situation, Geoffrey Hinton expressed uncertainty about finding a foolproof path to ensuring AI safety.

He pointed out that we are facing situations we have never dealt with before, and that people usually get things wrong the first time they confront something completely new. With these technologies, Hinton stressed, we cannot afford to get it wrong.

When Pelley asked whether AI might eventually take over from humanity, Hinton acknowledged that it is a possibility. He clarified that he is not saying this will happen, but that it would be best to stop AI systems from ever wanting to take over. The challenge is that it is not clear we can prevent them from having such ambitions.

Then what do we do?

Hinton suggested that humanity may be at a turning point, where we must decide whether to develop these systems further and how to protect ourselves if we do.

Hinton’s central message was that the future of AI is highly uncertain. Because these systems do have a degree of understanding, he argued, we need to think hard about what comes next, even though he acknowledged that the path forward remains unclear.

According to Pelley’s report, Hinton has no regrets about his contributions to AI, given its potential for good. However, he believes now is the time to run more experiments to improve our understanding of AI and for governments to establish regulations. Hinton also called for a global treaty banning the use of military robots, underscoring the importance of ethical considerations as AI technology advances.

Source: vtt.edu.vn