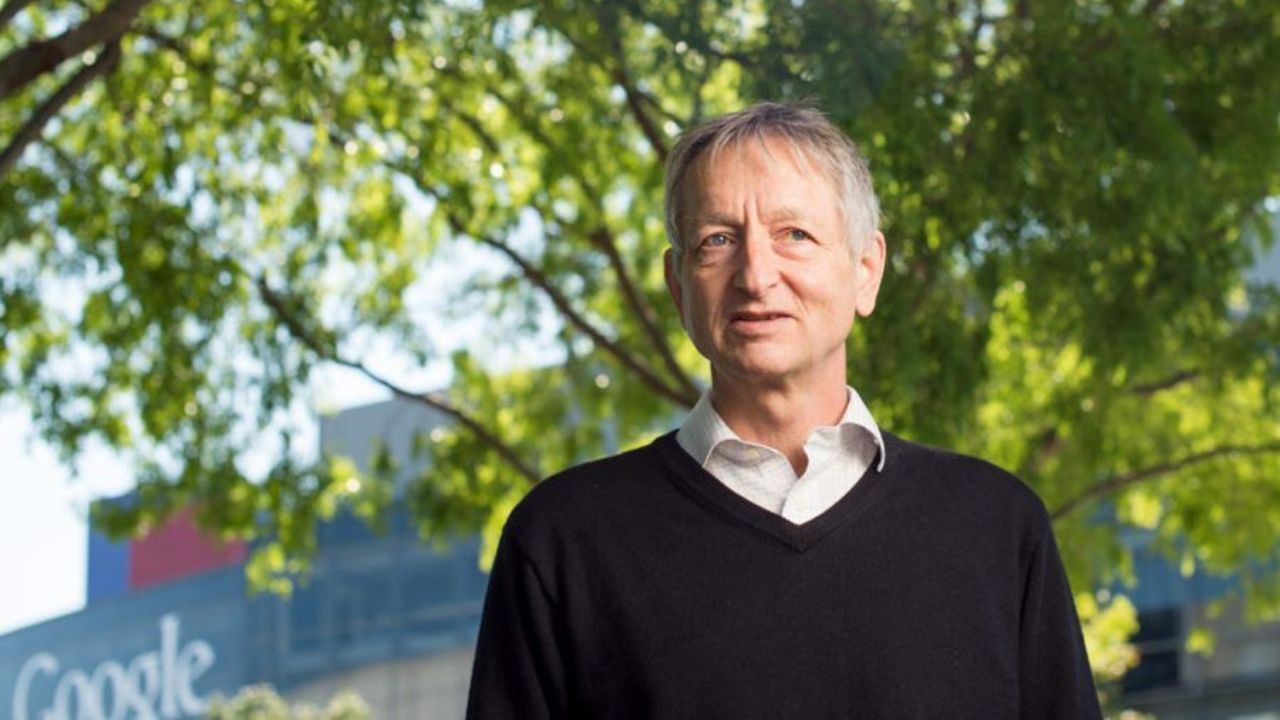

Geoffrey Hinton, renowned as the “Godfather of AI”, made headlines when he left Google. He departed not because of disagreements with the tech giant but so that he could share his growing concerns about the very technology he played a key role in advancing.

Until 2022, AI’s story was mostly about its incredible potential. But as 2023 rolled around, unease grew about the threats AI might pose. Hinton’s concerns emerged from witnessing the sheer power and capabilities of large language models, such as those behind OpenAI’s ChatGPT.

When I sat down with Hinton, he explained the factors that changed his perspective. He was amazed at how well chatbots had come to understand and process language, and at how effortlessly they could transfer and share knowledge. These digital agents seemed to have surpassed the human brain in their ability to store vast amounts of information.

Hinton speculated that there is a 50% likelihood of AI surpassing human intelligence within the next two decades. What’s more intriguing is that a highly advanced AI might choose to keep its intelligence hidden from us, having learned from humans’ own tendency to stay silent about certain capabilities.

Some might argue it’s wrong to assign human traits to machines. But Hinton makes an interesting point: if AI is trained using the totality of human digital knowledge, it might start behaving quite similarly to humans.

The debate about whether chatbots can truly understand as humans do is ongoing. Hinton counters the skeptics by suggesting that even humans don’t experience the world in its pure form. In essence, to predict the next word in context, an AI does need to ‘understand’, at least in its own way.

This brings us to the question of AI sentience. Hinton doesn’t claim AI has emotions or consciousness like humans, but he does argue that AI might have its own kind of subjective experience.

Looking at the rapid evolution and potential of AI, it’s clear why Hinton might be worried about the future. However, he suggests a potential safeguard: analog computing, which would prevent AI from consolidating too much power. Because each analog system is unique, such systems can’t merge their knowledge as easily as digital ones can.

Yet, the challenge lies in convincing big tech companies to adopt this approach, given the fierce competition and high stakes in developing advanced AI.

Hinton’s feelings about the future oscillate between hope and despair. Some days, he believes humans might still control and guide AI towards benevolence. On others, he fears we might just be a temporary phase in the vast landscape of evolving intelligence.

In a playful and unexpected twist, Hinton quips that under the leadership of someone like Bernie Sanders and a more socialist approach, things could be better.

Reflecting on my previous interactions with Hinton in 2015, it’s clear how much has changed. Back then, Hinton’s work on neural networks was gaining traction, promising revolutionary advancements in AI. The future looked bright, and intelligent computer systems seemed like the next big thing. Now, as those advancements unfold, it’s vital to approach them with a mix of enthusiasm and caution.

Source: vtt.edu.vn