Just over two months have passed since GPT-4 came out, but users are already looking forward to GPT-5. Numerous tests and qualitative reviews have already shown how capable and powerful GPT-4 is. It’s even better now that it has gained new features such as ChatGPT plugins and the ability to browse the internet.

Users now want to know more about GPT-5, the upcoming OpenAI model, and about the possibility of AGI. Follow our guide below to learn when GPT-5 may come out and which features you can expect.

GPT-5 Release Date

When GPT-4 was launched in March 2023, OpenAI’s next-generation model was expected to be released by December 2023. Additionally, Siqi Chen, the CEO of Runway, tweeted that “gpt5 is scheduled to complete training this December.” When asked if OpenAI is training GPT-5, however, OpenAI CEO Sam Altman stated during a speech at MIT in April, “We are not and won’t be for some time.” Therefore, the rumour that GPT-5 will be released by the end of 2023 has been debunked.

Experts speculate that OpenAI may release GPT-4.5, an intermediate release between GPT-4 and GPT-5, in October 2023, much as GPT-3.5 sat between GPT-3 and GPT-4. GPT-4.5 is expected to finally bring the multimodal capability, that is, the ability to analyze both images and text. OpenAI announced and demonstrated GPT-4’s multimodal capabilities during the GPT-4 Developer livestream in March 2023, but has not yet released them to users.

Aside from that, OpenAI has a number of issues to resolve with the GPT-4 model before it begins work on GPT-5. GPT-4’s inference time is currently long, and the model is costly to run. GPT-4 API access is still difficult to obtain. In addition, OpenAI has only recently made ChatGPT plugins and internet browsing available, and both are still in beta. Code Interpreter, which is currently in alpha, has yet to be released to all paying users.

GPT-4 is quite powerful, but I believe OpenAI recognizes that compute efficiency is one of the most important aspects of operating a model sustainably. Each new feature and capability also means a larger infrastructure to manage while keeping all checkpoints running reliably. Assuming government agencies do not put up a regulatory roadblock, our best estimate is that GPT-5 will be released in 2024, around the same time as Google Gemini.

GPT-5 Features and Capabilities (Expected)

In this section, we cover the features and capabilities expected from GPT-5:

Reduced Hallucination

Industry insiders are speculating that GPT-5, OpenAI’s next large language model, may be on the cusp of achieving Artificial General Intelligence (AGI). We discuss that in more detail below. Beyond that, GPT-5 is expected to be more efficient, cut inference time, and reduce hallucination, among other benefits. Hallucination is one of the main reasons many users remain sceptical of AI models.

According to OpenAI, GPT-4 scores 40% higher than GPT-3.5 on internal adversarially designed factuality evaluations across all nine categories. GPT-4 is also 82% less likely to respond to requests for inaccurate and disallowed content. In accuracy tests across the various categories, it is close to the 80% mark. That is a significant step forward in the fight against hallucination.

OpenAI is now expected to bring hallucination down to below 10% with GPT-5, which would make LLMs considerably more trustworthy. I have been using GPT-4 for many tasks recently, and so far it has given only factual responses. According to experts, there is a strong chance that GPT-5 will hallucinate even less than GPT-4.

Compute-efficient Model

GPT-4 is reportedly expensive to run, at $0.03 per 1K tokens, and its inference time is also comparatively long. The older GPT-3.5-turbo model is far cheaper, at only $0.002 per 1K tokens. GPT-4 is costly because it reportedly has around 1 trillion parameters (OpenAI has not confirmed the figure), which calls for expensive compute infrastructure. In our recent explainer on Google’s PaLM 2 model, we noted that its smaller size makes it faster.

According to a recent CNBC report, PaLM 2 has 340 billion parameters, far fewer than GPT-4 is believed to have. Google has said that research creativity is the key to building great models and that bigger is not always better. To make its upcoming models compute-optimal, OpenAI will have to find creative ways to shrink the model without compromising output quality.

A significant share of OpenAI’s revenue comes from enterprises and businesses, so GPT-5 must not only be cheaper to run but also return output faster. Developers already complain that GPT-4 API calls frequently stop responding, which forces them to fall back on the GPT-3.5 model in production. OpenAI will likely aim to improve performance with GPT-5, especially after the release of Google’s much faster PaLM 2 model, which you can try right now.
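To put the two prices in perspective, here is a minimal Python sketch that compares them. It uses only the per-1K-token rates quoted above and treats them as flat rates; the one-million-token workload and the helper names `cost_usd` and `price_ratio` are assumptions made up for this illustration, not part of any OpenAI tooling.

```python
# Per-1K-token prices in USD, as quoted in the paragraph above.
PRICE_PER_1K = {
    "gpt-4": 0.03,
    "gpt-3.5-turbo": 0.002,
}


def cost_usd(model: str, tokens: int) -> float:
    """Estimated cost of processing `tokens` tokens at the quoted flat rate."""
    return PRICE_PER_1K[model] * tokens / 1000


def price_ratio(expensive: str, cheap: str) -> float:
    """How many times more one model costs per token than another."""
    return PRICE_PER_1K[expensive] / PRICE_PER_1K[cheap]


if __name__ == "__main__":
    tokens = 1_000_000  # hypothetical workload, chosen only for illustration
    for model in PRICE_PER_1K:
        print(f"{model}: ${cost_usd(model, tokens):.2f} for {tokens:,} tokens")
    print(f"price ratio: {price_ratio('gpt-4', 'gpt-3.5-turbo'):.0f}x")
```

At these rates the same workload costs about 15 times more on GPT-4, which helps explain why developers keep GPT-3.5 in production.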

Multisensory AI Model

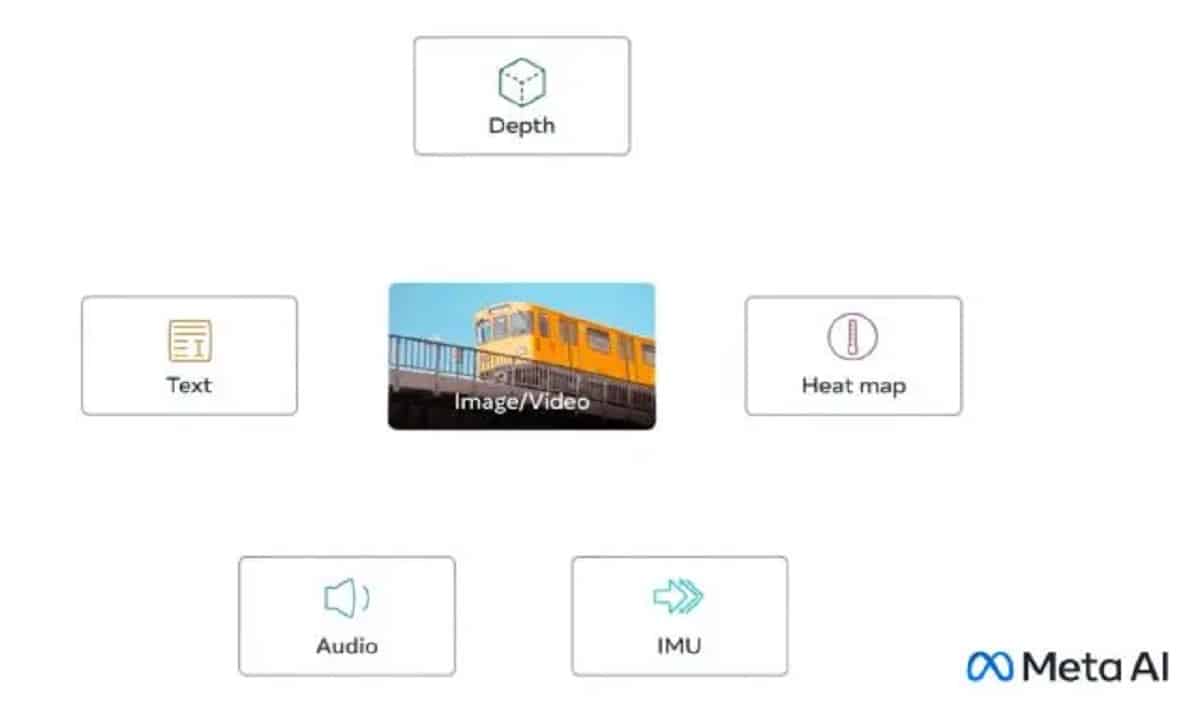

GPT-4 was announced as a multimodal AI model, but it deals with only two types of data: images and text. Even that capability has not been added to GPT-4 yet, although OpenAI may release it in the coming months. GPT-5 could take a big leap and become truly multimodal, handling text, audio, images, videos, depth data, and temperature. It would be able to interlink data streams from these different modalities to create a shared embedding space.

Meta recently released ImageBind, an AI model that combines data from six different modalities, and open-sourced it for research purposes. OpenAI has not revealed much in this space, but it has already built strong foundation models for vision analysis and image generation: CLIP (Contrastive Language-Image Pretraining), which analyzes images, and DALL-E, a popular Midjourney alternative that generates images from textual descriptions.

This is an area of ongoing research, and its applications are not yet fully understood. Meta says the technology can be used to design and create immersive virtual reality content. We will have to wait and see what OpenAI does in this space and whether GPT-5 brings more AI applications across multiple modalities.

Long-Term Memory

GPT-4 supports a maximum context length of 32K tokens, priced at $0.06 per 1K tokens. In just a few months, the standard has moved from 4K tokens to 32K tokens. Anthropic recently expanded the context window of its Claude AI chatbot from 9K to 100K tokens. GPT-5 is expected to support long-term memory through a much larger context length.

This could help in building AI characters that retain long-lasting memories of your personal experiences. It would also let you load entire collections of books and text documents into a single context window. Long-term memory support could open the way to many new AI applications, and GPT-5 could make them possible.
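To make the context-length figures above more concrete, the following Python sketch estimates whether a document fits in a given window and what it costs to fill one. The window sizes and the $0.06 price are the rounded figures quoted in this section. The words-per-token ratio is only a rough rule of thumb for English text and is an assumption, as are the helper names; a real tokenizer will give different counts.

```python
import math

# Context window sizes in tokens, rounded as quoted in this section.
CONTEXT_WINDOWS = {
    "4K": 4_000,
    "GPT-4 32K": 32_000,
    "Claude 100K": 100_000,
}

# Assumption: roughly 0.75 English words per token (rule of thumb only).
WORDS_PER_TOKEN = 0.75


def estimate_tokens(word_count: int) -> int:
    """Rough token estimate for a text with `word_count` English words."""
    return math.ceil(word_count / WORDS_PER_TOKEN)


def fits(word_count: int, window_tokens: int) -> bool:
    """True if the estimated token count fits inside the context window."""
    return estimate_tokens(word_count) <= window_tokens


def fill_cost_usd(window_tokens: int, price_per_1k: float = 0.06) -> float:
    """Cost of sending a completely full window at a flat per-1K-token price."""
    return window_tokens / 1000 * price_per_1k


if __name__ == "__main__":
    book_words = 60_000  # hypothetical short book
    for name, size in CONTEXT_WINDOWS.items():
        verdict = "fits" if fits(book_words, size) else "does not fit"
        print(f"{name}: a {book_words:,}-word text {verdict}")
    print(f"Filling 32K tokens once costs about ${fill_cost_usd(32_000):.2f}")
```

Under these assumptions, a short book overflows a 32K window but fits in a 100K one, which is why a much longer context in GPT-5 would matter for loading whole documents.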

GPT-5 Release: Fear of AGI?

In February 2023, OpenAI CEO Sam Altman wrote a blog post about artificial general intelligence (AGI) and how it could benefit all of humanity. As the name suggests, AGI refers to AI systems that are generally smarter than humans. Rumours are circulating that OpenAI’s upcoming GPT-5 model may achieve AGI. The claims are unconfirmed, but there appears to be some truth to them.

Autonomous AI agents such as Auto-GPT and BabyAGI have already been developed. These agents are based on GPT-4 and can make decisions and draw logical conclusions on their own. It is possible that GPT-5 will be deployed with some form of AGI.

In his blog post, Altman says OpenAI believes it has to continuously learn and adapt by deploying less powerful versions of the technology, so that it avoids high-stakes situations in which it has only one chance to get things right. He also acknowledges the massive risks involved in navigating powerful systems like AGI. More recently, Altman urged US lawmakers at a Senate hearing to regulate emerging AI systems.

At the hearing, Altman warned that the technology has the potential to go drastically wrong. He said OpenAI wants to be vocal about that risk and to work with the government to prevent it from happening. OpenAI has been pushing for regulation of advanced AI systems that are highly powerful and intelligent. Note that Altman is seeking safety regulation only for such highly capable systems, not for open-source models or AI models developed by small startups.

It’s worth mentioning that Elon Musk and other prominent figures, including Steve Wozniak, Andrew Yang, and Yuval Noah Harari, called in March 2023 for a pause on large-scale AI experiments. Since then, there has been widespread pushback against AGI and against AI systems more powerful than GPT-4.

If OpenAI does add AGI capability to GPT-5, the public release will be delayed even further. Regulation would undoubtedly take effect, and activities related to safety and alignment would be rigorously evaluated. The good news is that OpenAI already has a strong GPT-4 model in place, and it is constantly adding new features and capabilities. There is no other AI model that comes close, not even Google Bard, which is based on PaLM 2.

OpenAI GPT-5: Future Stance

OpenAI has become increasingly secretive about its operations since the release of GPT-4. It no longer shares open-source research on the training dataset, architecture, hardware, training computation, and training procedure. It’s been a strange 180-degree turn for a corporation that was formed as a nonprofit (now capped profit) on the ideas of unfettered collaboration.

In an interview with The Verge in March 2023, Ilya Sutskever, the chief scientist of OpenAI, stated, “We were mistaken. We were completely mistaken. If you believe, as we do, that AI — AGI — will become enormously, insanely powerful at some point, then it just does not make sense to open-source. It’s a poor idea… I strongly think that in a few years, everyone will realize that open-sourcing AI is simply not a good idea.”

It is now evident that, in order to stay ahead in the AI race, OpenAI will open-source neither GPT-4 nor the upcoming GPT-5. However, another tech giant, Meta, has taken a different approach to AI research. Meta has been gaining favour in the open-source community by releasing many AI models under the CC BY-NC 4.0 license (research only, non-commercial).

Seeing the widespread adoption of Meta’s LLaMA and other AI models, OpenAI has also changed its stance on open source. According to recent rumours, OpenAI is developing a new open-source AI model that will be released to the public in the near future. There’s no word on its capabilities or how competitive it will be against GPT-3.5 or GPT-4, but it’s a welcome change.

In summary, GPT-5 will be a frontier model that pushes the boundaries of what AI is capable of. It is highly likely that GPT-5 will include some form of AGI. If that is the case, OpenAI must brace itself for more regulation (and possibly bans) around the world. The safe bet for the GPT-5 release date is sometime in 2024.


Source: vtt.edu.vn